
Your AI Assistant Is a Relay: How Copilot and Grok Were Turned Into C2 Proxies


Aryaman Behera | Co-Founder, CEO

Feb 20, 2026 | 5 min read

Summary

Check Point Research showed Copilot and Grok can be weaponized as bidirectional C2 proxies — no API keys, no accounts, no unusual traffic. Here's how the attack works and what to do about it.

Check Point Research demonstrated that Microsoft Copilot and Grok can be weaponized as covert command-and-control proxies with no API keys, no registered accounts, and no unusual network traffic. Once malware lands on a machine, it can receive operator commands and exfiltrate data entirely through legitimate HTTPS calls to copilot.microsoft.com and grok.com. Microsoft has changed Copilot's web-fetch behavior following responsible disclosure. Grok remained unpatched at time of writing.

What Check Point Found

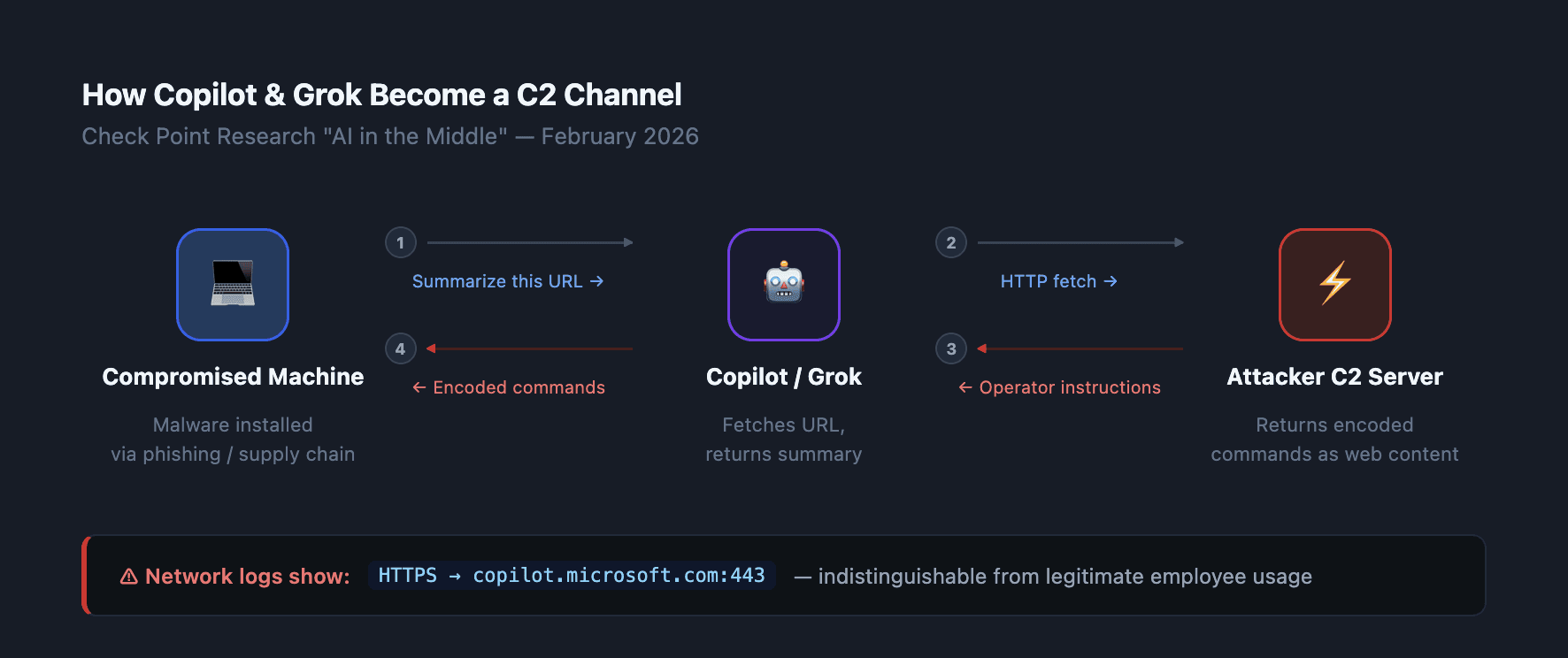

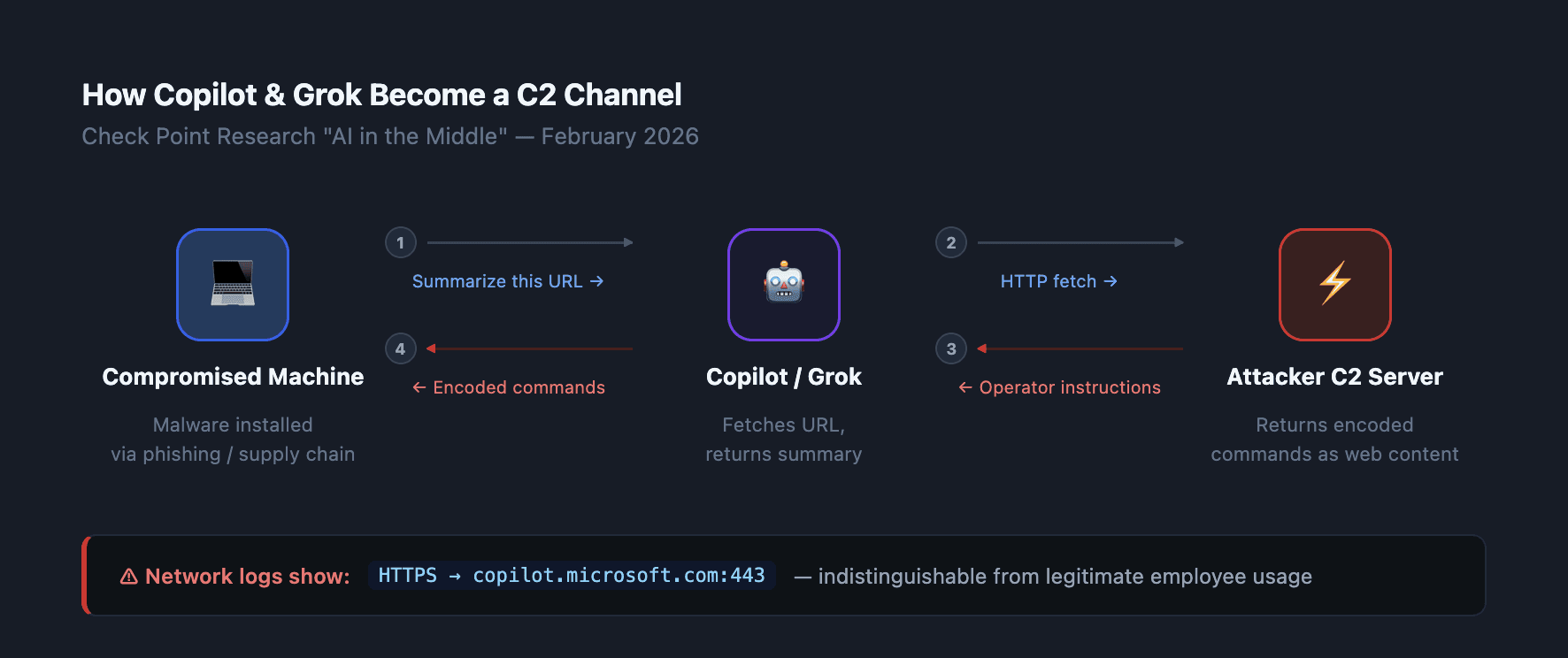

On February 18, 2026, Check Point Research published a technique they call "AI in the Middle" — a proof-of-concept showing that malware can use Copilot and Grok as a bidirectional C2 channel by exploiting those services' built-in URL-fetching and summarization capabilities.

The concept is straightforward, but the implications are serious: if you've allowed AI assistants onto your enterprise network (and most organizations have), you may have unknowingly opened a covert egress channel.

How the Attack Works

The attack requires prior machine compromise; this is not a zero-click attack. Once the attacker has initial access, the technique works as follows:

Step 1 — Malware initiates a prompt. The implant sends a specially crafted prompt to Copilot or Grok through their public web interfaces. The prompt instructs the AI to fetch a URL and summarize its content.

Step 2 — The AI fetches the attacker-controlled URL. Copilot and Grok have built-in web-browsing capabilities. When asked to retrieve external content, they comply. The attacker's C2 server returns a page containing encoded commands.

Step 3 — The AI returns the summary. The AI service processes the response and returns the content to the malware. The implant parses it for operator instructions.

Step 4 — Data flows back the same way. The malware can also use the channel for exfiltration: it can include system information in a prompt, encode that data into the URL it asks the AI to fetch, or simply have the AI relay responses from the C2 server that confirm receipt.

Check Point built their PoC using WebView2, an embedded browser component that ships preinstalled on every Windows 11 machine — no additional software needed.

Why This Is Harder to Detect Than a Traditional C2

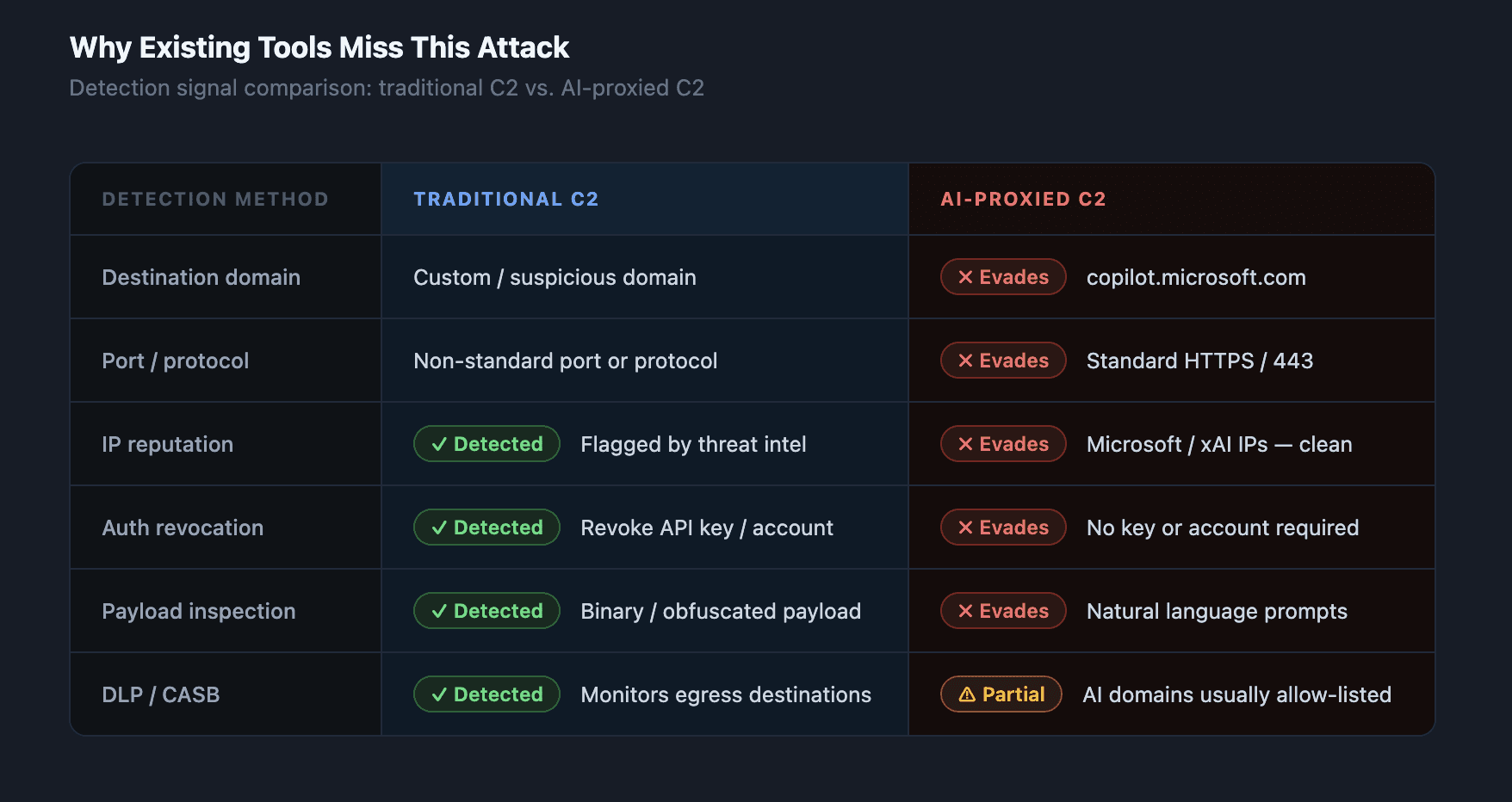

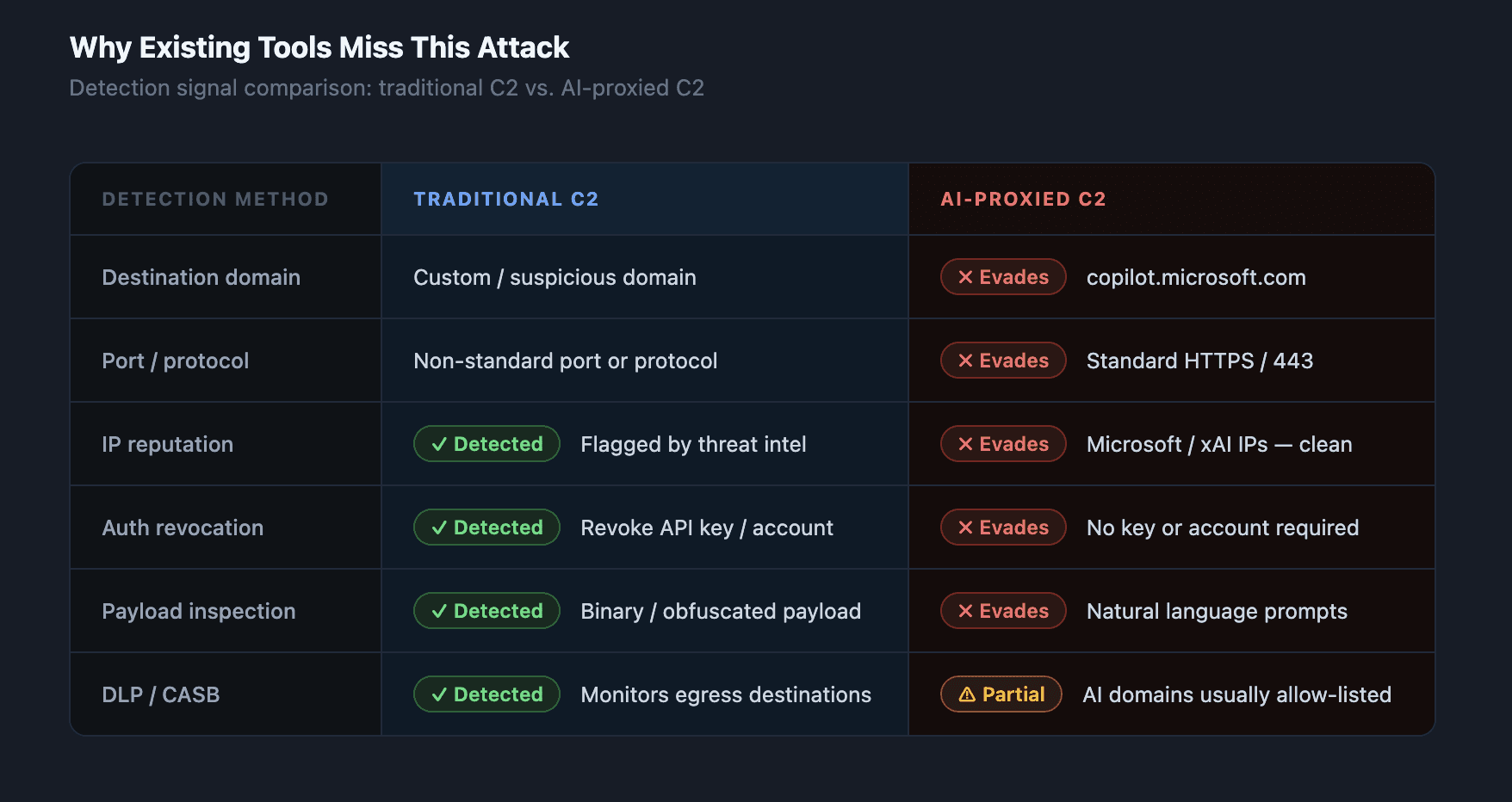

Traditional C2 detection relies on two signals: anomalous destination IPs/domains and unusual outbound ports or protocols. This technique defeats both.

The destination is trusted. copilot.microsoft.com and grok.com are commonly permitted in enterprise environments, so firewall rules are unlikely to flag them and SIEM alerts are unlikely to fire on them.

The protocol is standard HTTPS. Port 443, a valid TLS certificate, normal HTTP headers. Nothing in the connection itself is likely to trigger a DLP or NGFW inspection rule.

No authentication artifacts to revoke. No API key means you can't rotate credentials to cut the channel. No registered account means you can't suspend a user.

The payload looks like a conversation. Natural-language prompts are difficult to distinguish from legitimate use: a request to summarize "this internal document" at a URL looks much like a researcher asking Copilot to summarize a paper.
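One signal that can help separate relay traffic from ordinary conversation is the presence of long, high-entropy tokens (for example base64 blobs) in prompts or responses, because encoded commands and exfiltrated data rarely look like prose. The sketch below is a minimal illustration, assuming you already have the text of a prompt or response. `MIN_TOKEN_LEN` and `ENTROPY_THRESHOLD` are arbitrary starting values chosen for illustration, not figures from Check Point's research.

```python
import math
import re
from collections import Counter

# Hypothetical thresholds: tune against your own traffic before relying on them.
MIN_TOKEN_LEN = 24
ENTROPY_THRESHOLD = 4.0  # bits per character

# Candidate tokens: long runs of base64 / base64url alphabet characters.
TOKEN_RE = re.compile(r"[A-Za-z0-9+/=_-]{%d,}" % MIN_TOKEN_LEN)


def shannon_entropy(s: str) -> float:
    """Shannon entropy of a non-empty string, in bits per character."""
    counts = Counter(s)
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def suspicious_tokens(text: str) -> list[str]:
    """Return long tokens whose character entropy suggests encoded data."""
    return [t for t in TOKEN_RE.findall(text) if shannon_entropy(t) >= ENTROPY_THRESHOLD]
```

Expect false positives from long identifiers and hashes that users paste legitimately. This heuristic works better as one scoring input than as a standalone alert.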

The Bigger Picture: AI Services as Attack Infrastructure

This research signals a structural shift in how attackers may use AI platforms.

The immediate risk is covert egress. Any enterprise that has deployed Copilot, Grok, or similar AI assistants has a potential exfiltration path that bypasses conventional DLP controls. Prompt injection attacks have already demonstrated that AI inputs are an attack surface; this research extends that surface to the network layer.

The longer-term risk is what Check Point describes as AI-driven implants: using the AI service not just as a transport layer but as an external decision engine. Instead of hardcoded C2 logic, the malware offloads targeting decisions, triage, and operational choices to a live AI model. The implant becomes lighter, more adaptive, and harder to reverse-engineer because its "brain" runs remotely on someone else's GPU.

This is exactly the threat model that agentic AI security researchers have been warning about: when AI agents acquire the ability to browse the web, execute actions, and call external tools, every one of those capabilities is also an attack surface.

What to Do About It

Inventory your AI attack surface. Before you can defend against AI-proxied C2, you need to know which AI services are running in your environment, what network access they have, and which machines can reach them. Many organizations find more AI tool deployments than they expected once they run an automated inventory. Treat Copilot and Grok as egress endpoints, not just productivity tools.
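A first pass at an inventory can come from logs you likely already collect. The sketch below assumes a proxy or DNS log exported as CSV with hypothetical `src_host` and `dest_host` columns and a hand-maintained set of AI assistant domains. Both the column names and the domain list are assumptions to adapt to your environment.

```python
import csv
import io
from collections import defaultdict

# Hypothetical starting list; extend with the AI services relevant to your estate.
AI_DOMAINS = {"copilot.microsoft.com", "grok.com"}


def matches_ai_domain(host: str) -> bool:
    """True if host is one of the AI domains or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def inventory(log_file) -> dict[str, set[str]]:
    """Map each source host to the set of AI domains it contacted."""
    seen = defaultdict(set)
    for row in csv.DictReader(log_file):
        dest = row["dest_host"].lower().rstrip(".")
        if matches_ai_domain(dest):
            seen[row["src_host"]].add(dest)
    return dict(seen)


if __name__ == "__main__":
    # Inline sample standing in for a real log export.
    sample = io.StringIO(
        "src_host,dest_host\n"
        "ws-017,copilot.microsoft.com\n"
        "ws-017,intranet.example.com\n"
        "srv-db-02,grok.com\n"
    )
    for host, domains in sorted(inventory(sample).items()):
        print(host, sorted(domains))
```

The output lists each source host with the AI domains it reached. If a server with no business talking to an AI assistant shows up in that list, it warrants a closer look.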

Red team your AI deployments. Standard penetration testing is unlikely to catch this. You need testing that specifically probes AI-layer attack chains: can an attacker use your deployed AI services as a covert channel? Can they exfiltrate data through summarization prompts? ARTEMIS runs AI-specific red team scenarios, including agentic and tool-call attack chains.

Add runtime monitoring for AI traffic. Monitoring the content of AI interactions, not just the destination, is the most direct way to detect malicious summarization prompts. ARGUS provides runtime interception for AI applications, flagging suspicious prompt patterns and unusual web-fetch requests before they become successful exfiltration events.
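To make "suspicious prompt patterns" concrete, here is a minimal sketch of one rule: flag prompts that ask the assistant to fetch or summarize a URL whose host is not on an approved list. It assumes you can already see prompt text at some inspection point. The verb list and `APPROVED_HOSTS` are hypothetical placeholders. This is an illustration of the idea, not a description of how ARGUS or any other product implements detection.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of hosts users routinely ask assistants to summarize.
APPROVED_HOSTS = {"learn.microsoft.com", "arxiv.org"}

# Hypothetical verbs that indicate a fetch/summarize-style request.
FETCH_VERBS = re.compile(r"\b(fetch|summari[sz]e|open|visit|read|retrieve)\b", re.IGNORECASE)
URL_RE = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def unapproved_fetch_targets(prompt: str) -> list[str]:
    """Return URLs in a fetch/summarize-style prompt whose host is not approved."""
    if not FETCH_VERBS.search(prompt):
        return []
    flagged = []
    for url in URL_RE.findall(prompt):
        host = (urlparse(url).hostname or "").lower().rstrip(".")
        approved = any(host == h or host.endswith("." + h) for h in APPROVED_HOSTS)
        if not approved:
            flagged.append(url)
    return flagged
```

An attacker can phrase prompts to avoid any fixed verb list. A rule like this is a tripwire to combine with other signals, not a complete control.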

Don't wait for a CVE. Microsoft has changed Copilot's web-fetch behavior, but xAI has not publicly acknowledged or fixed the issue in Grok at time of writing. The technique may be adaptable to other AI services with URL-fetch capabilities. Your security program needs to account for the attack class, not just the specific services.

What This Isn't

This is not a prompt injection vulnerability in the traditional sense. The attacker isn't trying to hijack the AI's instructions or make it say something harmful. They're using the AI exactly as designed — fetch a URL, summarize it, return the result — and routing their C2 traffic through that legitimate function.

This is also not a flaw likely to be closed by a single CVE and patch cycle. Microsoft's change to Copilot addresses one service, but the technique is a logical consequence of giving AI services network access. The lasting "fix" is detection and control at the AI layer, not a binary patch.

FAQ

What is "AI as C2 proxy" and how does it work?

It's a technique where malware uses a legitimate AI assistant — like Microsoft Copilot or Grok — as a relay to receive attacker commands and exfiltrate data. The malware sends prompts instructing the AI to fetch attacker-controlled URLs. The AI's response, containing encoded commands, is then parsed by the malware. Because the traffic flows through trusted AI service domains over HTTPS, it bypasses most enterprise network monitoring.

Has Microsoft patched this?

Microsoft acknowledged Check Point's findings following responsible disclosure and implemented changes to Copilot's web-fetch behavior. The exact scope of the mitigation has not been publicly detailed. xAI has not issued a public statement or patch for Grok as of February 20, 2026.

Does this require a zero-day or special privileges?

No. The technique requires initial machine compromise by conventional means (phishing, supply chain, etc.), but once the attacker has basic code execution, no additional privileges, API keys, or registered accounts are needed. Check Point's proof-of-concept uses WebView2, which ships preinstalled on every Windows 11 machine.

Can my existing security tools detect this?

Unlikely, without AI-specific monitoring. Traditional network monitoring, DLP tools, and firewalls see legitimate HTTPS traffic to trusted AI domains. Detection requires inspecting the content of AI interactions in real time — specifically looking for summarization prompts targeting external URLs and correlating those with suspicious machine behavior.
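The correlation step can be sketched against endpoint telemetry that records which process opened each connection. This minimal sketch makes several assumptions. `NetEvent` and its fields are hypothetical; EDR products and Sysmon network-connection events carry similar data under their own field names. The expected-process sets are placeholder baselines. Because Check Point's PoC used WebView2, connections from `msedgewebview2.exe` are judged by the application hosting the control, not by the process name alone.

```python
from dataclasses import dataclass

AI_DOMAINS = {"copilot.microsoft.com", "grok.com"}

# Example baseline: browsers expected to reach AI assistants directly.
EXPECTED_PROCESSES = {"msedge.exe", "chrome.exe", "firefox.exe"}

# WebView2 content runs in msedgewebview2.exe, so judge those connections by
# the application hosting the control. Example entries only; baseline your fleet.
WEBVIEW_PROCESS = "msedgewebview2.exe"
EXPECTED_WEBVIEW_HOSTS = {"ms-teams.exe", "olk.exe"}


@dataclass
class NetEvent:
    """Hypothetical normalized network event from endpoint telemetry."""
    host: str      # machine name
    process: str   # process that opened the connection
    host_app: str  # app hosting a WebView2 control (may require walking the process tree)
    dest: str      # destination hostname


def is_ai_domain(dest: str) -> bool:
    dest = dest.lower().rstrip(".")
    return any(dest == d or dest.endswith("." + d) for d in AI_DOMAINS)


def is_suspicious(event: NetEvent) -> bool:
    """Flag AI-domain connections from processes outside the expected baseline."""
    if not is_ai_domain(event.dest):
        return False
    proc = event.process.lower()
    if proc == WEBVIEW_PROCESS:
        return event.host_app.lower() not in EXPECTED_WEBVIEW_HOSTS
    return proc not in EXPECTED_PROCESSES


def flag(events: list[NetEvent]) -> list[NetEvent]:
    """Return only the events worth an analyst's attention."""
    return [e for e in events if is_suspicious(e)]
```

On its own, a flagged event is only a lead. It becomes meaningful when joined with other signals from the same machine, such as recent suspicious downloads or new persistence.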

Which other AI services could be vulnerable to the same technique?

Any AI service with web-browsing or URL-fetch capabilities is a candidate. This includes AI agents that can browse the web, agentic frameworks with tool-call access to HTTP clients, and enterprise AI products that fetch external content as part of their workflow. The attack class is broader than Copilot and Grok specifically.
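For agentic frameworks you build or operate yourself, one AI-layer control is an egress policy in front of whatever HTTP tool the agent can call. The sketch below is framework-agnostic and hypothetical. `guarded_fetch` wraps a caller-supplied fetch function and allows only HTTPS URLs to approved hosts. It also refuses URLs with query strings, since data can be encoded into the URL itself. The allowlist and the no-query-string rule are assumptions that real workloads will need to adjust.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical allowlist for an internal agent's fetch tool.
ALLOWED_HOSTS = {"docs.example.com"}


class EgressBlocked(Exception):
    """Raised when an agent requests a URL outside the egress policy."""


def check_url(url: str) -> None:
    """Raise EgressBlocked unless the URL satisfies the (example) policy."""
    parts = urlparse(url)
    host = (parts.hostname or "").rstrip(".")  # urlparse lowercases hostname
    if parts.scheme != "https":
        raise EgressBlocked(f"scheme not allowed: {parts.scheme!r}")
    if host not in ALLOWED_HOSTS:
        raise EgressBlocked(f"host not allowed: {host!r}")
    if parts.query:
        # Data can be encoded into the URL itself, so refuse query strings by default.
        raise EgressBlocked("query strings are not allowed")


def guarded_fetch(url: str, fetch: Callable[[str], str]) -> str:
    """Apply the egress policy, then delegate to the agent's real fetch function."""
    check_url(url)
    return fetch(url)
```

This only narrows the channel, because data can also ride in the URL path. A stricter policy might also pin allowed path prefixes, or route the tool through a proxy that logs every request.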

Secure Your AI Attack Surface Before Someone Else Does

Copilot and Grok are on your network. So are a dozen other AI tools your teams installed last quarter. Every one of them is a potential egress point. Get a demo of ARTEMIS and ARGUS to see what your AI attack surface actually looks like — and what's already exploitable.


8 The Green, Ste A

Dover, DE 19901, United States of America
