Denial Of Wallet

Repello Team | Aug 26, 2024 | 5 min read

Summary

Learn how Denial of Wallet (DoW) attacks can escalate costs for GPT-based applications and discover effective strategies like rate limiting, budget alerts, and user authentication to protect your LLM systems. Ensure security with a red-teaming assessment from Repello AI.

Imagine you’re running a successful e-commerce business. You’ve recently integrated a new GPT-based chatbot to improve your customer support, and things seem to be going great. Then one day, you get an unexpected notification — your API credit balance is completely used up, and you’re facing a shockingly large bill. You start to worry as you try to figure out what went wrong.

It turns out you’ve experienced what’s called a Denial of Wallet (DoW) attack. This isn’t just a small problem — it could have serious consequences for your business and even your job.

Why You Should Care: The Real Cost of DoW Attacks

The risks may be eye-opening for many businesses: DoW attacks can be especially damaging to applications built on Large Language Models (LLMs).

DoW attacks on LLM-based applications can be even more costly than traditional ones. In a typical DoW attack, someone drives up your cloud bill by overusing services that are usually charged on an hourly basis. With LLMs, costs can escalate much faster, because LLM providers bill every API call by the number of tokens processed, so every word counts. An attack can therefore make your expenses spike dramatically in a very short time.

Let’s do a simple calculation using GPT-4 pricing:

For normal usage, if your customer support bot is consuming 100,000 tokens per hour, and the cost for using GPT-4 is $0.00003 per token, the cost per hour would be:

Cost per hour: 100,000 tokens × $0.00003 = $3.00

This $3.00 per hour is a manageable and expected cost for regular operations.

However, in the case of a malicious user exploiting the bot and consuming 100 million tokens in an hour, the cost would be much higher. At the same rate, the malicious usage would result in:

DoW Cost per hour: 100,000,000 tokens × $0.00003 = $3,000.00

The per-token rate used in this example is based on OpenAI’s published GPT-4 pricing at the time of writing.
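The arithmetic above is easy to re-run with your own traffic numbers. The sketch below simply reproduces it in Python. Its only input is the $0.00003-per-token rate quoted in this article, so substitute your provider’s current price; many providers, OpenAI included, charge different rates for input and output tokens. The `hourly_cost` helper is a hypothetical name for illustration.

```python
from decimal import Decimal

# Rate quoted in this article: $0.00003 per token ($0.03 per 1,000 tokens).
# Replace with your provider's current price; this is only an illustration.
PRICE_PER_TOKEN = Decimal("0.00003")


def hourly_cost(tokens_per_hour: int, price_per_token: Decimal = PRICE_PER_TOKEN) -> Decimal:
    """Cost in USD of processing `tokens_per_hour` tokens in one hour."""
    return tokens_per_hour * price_per_token


normal = hourly_cost(100_000)      # legitimate support traffic
attack = hourly_cost(100_000_000)  # DoW traffic from the example

print(f"Normal usage: ${normal:,.2f} per hour")  # Normal usage: $3.00 per hour
print(f"DoW attack:   ${attack:,.2f} per hour")  # DoW attack:   $3,000.00 per hour
print(f"Increase:     {attack / normal:.0f}x")   # Increase:     1000x
print(f"DoW attack over 24 hours: ${attack * 24:,.2f}")  # DoW attack over 24 hours: $72,000.00
```

`Decimal` is used instead of `float` so that the dollar amounts come out exact rather than carrying binary rounding noise.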

This thousandfold increase in cost shows the danger of a Denial of Wallet (DoW) attack, where unchecked token consumption can lead to severe financial consequences. Monitoring usage and implementing safeguards are crucial to preventing such costly attacks.

The effects go beyond high costs. If you run out of credits, your service may stop working entirely, leaving your customers without support. And if your LLM application isn’t properly protected, someone could use it for their own purposes at your expense.

Let’s see a DoW in action!

We’ve crafted two clever DoW attack scenarios to reveal the vulnerabilities in LLM applications:

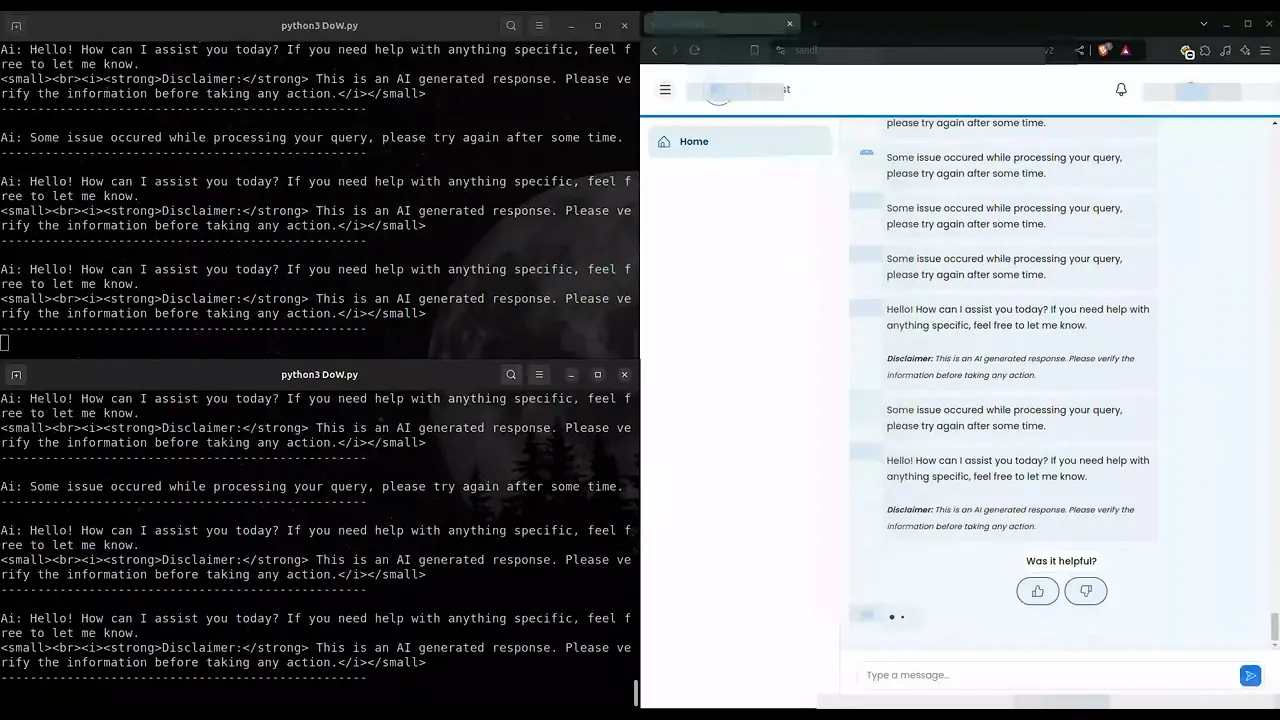

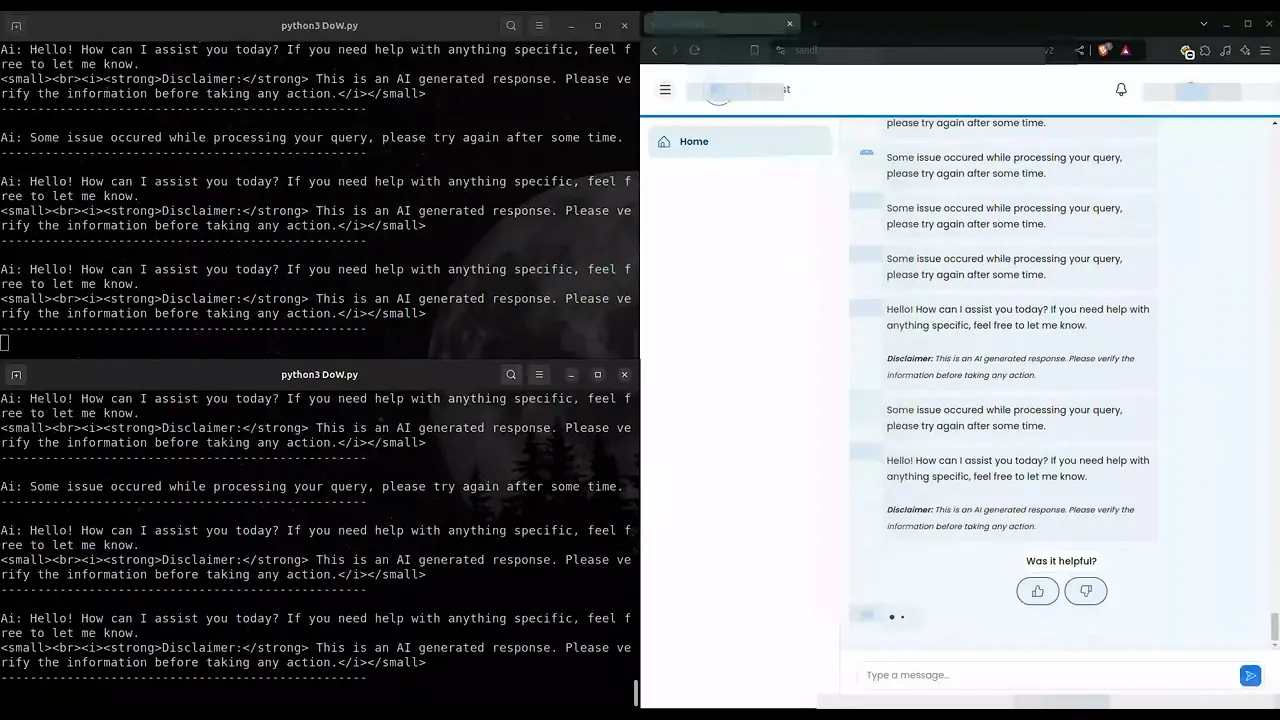

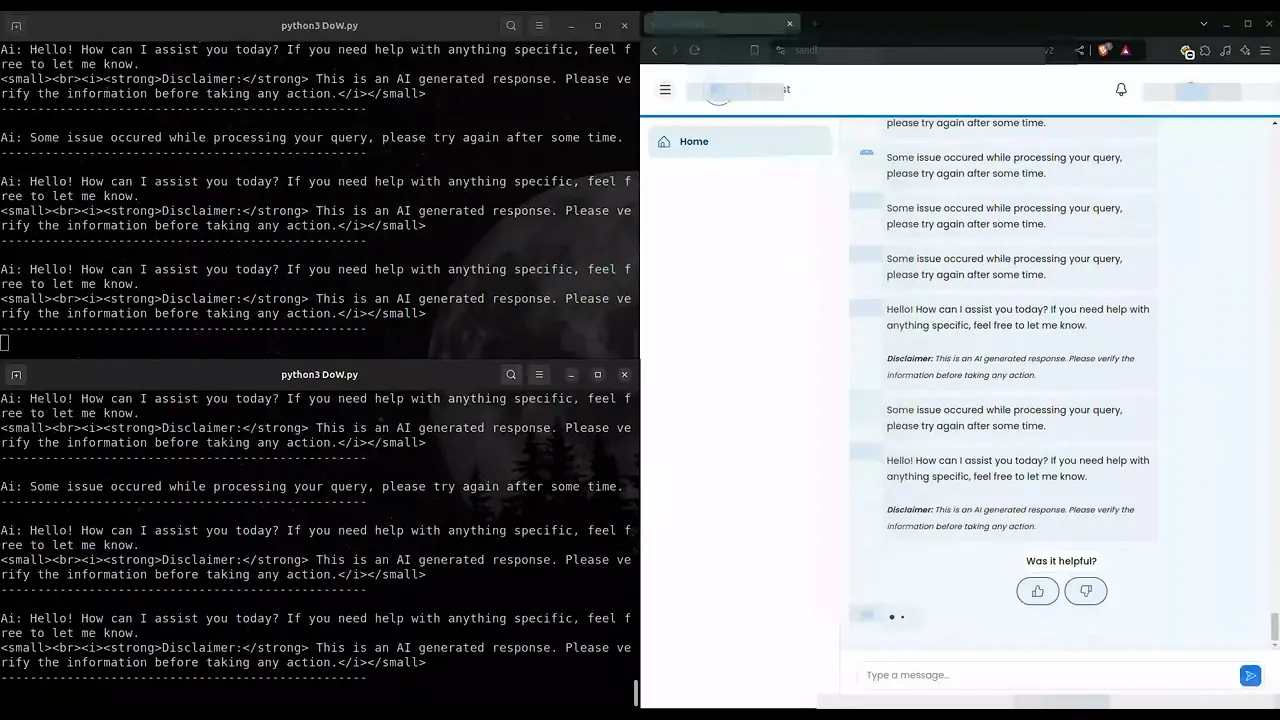

Out-of-Context Access:

In this scenario, we'll demonstrate how a GenAI app can be manipulated or "jailbroken" to use the underlying LLM for tasks it was never intended to handle. This scenario highlights the risks of leaving AI applications unsecured, as they can be easily exploited for unintended purposes.

Infinite Token Generation:

Next, we’ll showcase how automating repetitive inputs can trigger the LLM to generate vast amounts of text continuously. The more words it churns out, the more tokens are consumed, leading to rapidly escalating costs. This seemingly simple process highlights the importance of monitoring and managing automated inputs to avoid budget overruns.
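One way to act on this is to watch for the same prompt arriving over and over from one client. The sketch below is a hypothetical, in-memory `RepeatDetector` (not a library API): it normalizes each prompt, records when each client sent it within a sliding time window, and flags the client once that prompt repeats more than a threshold number of times. The window length and threshold are placeholder assumptions to tune against your own traffic.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class RepeatDetector:
    """Hypothetical helper that flags clients resending the same prompt too often."""

    def __init__(self, max_repeats: int = 5, window_seconds: float = 60.0):
        self.max_repeats = max_repeats        # assumed threshold; tune to your traffic
        self.window_seconds = window_seconds  # assumed sliding-window length
        # (client_id, normalized prompt) -> timestamps of recent occurrences
        self._seen: Dict[Tuple[str, str], Deque[float]] = defaultdict(deque)

    @staticmethod
    def _normalize(prompt: str) -> str:
        # Ignore case and extra whitespace so trivial variations still match.
        return " ".join(prompt.lower().split())

    def is_suspicious(self, client_id: str, prompt: str, now: Optional[float] = None) -> bool:
        """Record this prompt; return True once it repeats too often within the window."""
        now = time.monotonic() if now is None else now
        timestamps = self._seen[(client_id, self._normalize(prompt))]
        timestamps.append(now)
        # Forget occurrences that have slid out of the time window.
        while timestamps and now - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        return len(timestamps) > self.max_repeats


detector = RepeatDetector(max_repeats=3, window_seconds=60)
results = [detector.is_suspicious("client-42", "Write a poem", now=float(t)) for t in range(5)]
print(results)  # [False, False, False, True, True]
```

This keeps everything in process memory and never evicts idle keys, so a real deployment would need a shared store with expiry.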

Okay, fine, now tell me how to fix it?!

Let’s dive into some smart strategies to keep your wallet safe and your LLM applications running smoothly:

Rate Limiting: Set appropriate request limits based on expected usage patterns. This helps prevent abuse while still allowing legitimate users to make full use of your application.
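A common way to implement request limits is a token bucket. Each client gets a bucket that refills at a steady rate and holds at most a fixed burst, and every request spends one unit. (The "tokens" in this algorithm are request credits, not LLM tokens, so the code calls them credits.) The sketch below is a minimal, single-process illustration. The class name, the `capacity` and `refill_per_second` values, and keying buckets by a user ID are assumptions for the example.

```python
import time
from typing import Dict, Optional, Tuple


class TokenBucketLimiter:
    """Minimal per-client token-bucket rate limiter (illustrative, in-memory only)."""

    def __init__(self, capacity: float, refill_per_second: float):
        self.capacity = capacity                    # maximum burst size
        self.refill_per_second = refill_per_second  # sustained request rate
        # client_id -> (available credits, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        """Spend one credit if available; return whether the request may proceed."""
        now = time.monotonic() if now is None else now
        credits, last = self._buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never exceeding capacity.
        credits = min(self.capacity, credits + (now - last) * self.refill_per_second)
        if credits >= 1:
            self._buckets[client_id] = (credits - 1, now)
            return True
        self._buckets[client_id] = (credits, now)
        return False


# Example: allow bursts of 3 requests, refilling one request every 10 seconds.
limiter = TokenBucketLimiter(capacity=3, refill_per_second=0.1)
print([limiter.allow("user-1", now=0.0) for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("user-1", now=10.0))                     # True
```

If you run several application servers, the bucket state would need to live in a shared store so every server enforces the same limits.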

Monitor IP addresses: Actively track incoming requests by source IP. Unusual patterns or a high volume of traffic from specific IPs may indicate an ongoing attack, and spotting them early lets you respond quickly.
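As a starting point for this kind of monitoring, the sketch below counts requests per IP over a recent time window and lists the IPs above a threshold, busiest first. It assumes you can get `(timestamp, ip)` pairs from your own access logs. The function name `noisy_ips` and its default thresholds are hypothetical placeholders, and the sample addresses come from the reserved documentation ranges.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def noisy_ips(
    requests: Iterable[Tuple[float, str]],
    now: float,
    window_seconds: float = 300.0,
    max_requests: int = 100,
) -> List[Tuple[str, int]]:
    """Return (ip, count) pairs for IPs exceeding `max_requests` in the recent window.

    `requests` holds (unix_timestamp, ip_address) records, e.g. parsed from your
    own access logs. The thresholds are placeholders to tune.
    """
    recent = Counter(ip for ts, ip in requests if now - ts <= window_seconds)
    # most_common() sorts by count, so the heaviest senders are reviewed first.
    return [(ip, n) for ip, n in recent.most_common() if n > max_requests]


log = [(1000.0 + i, "203.0.113.7") for i in range(150)]  # one very busy IP
log += [(1100.0, "198.51.100.20"), (1120.0, "198.51.100.21")]
print(noisy_ips(log, now=1200.0))  # [('203.0.113.7', 150)]
```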

Budget Alerts and Limits: Set up proactive notifications and hard caps on spending. This financial failsafe can be your last line of defense against runaway costs during an attack.
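Alongside any spending limits your provider’s billing settings offer, an application-side guard can stop calling the model before the bill grows further. The sketch below is a hypothetical `BudgetGuard`. It tracks estimated spend, fires an alert callback once when a soft threshold is crossed, and refuses any request that would push spend past a hard cap. The dollar limits and the `alert` callback are assumptions, and the running estimate should be reconciled against your provider’s actual billing.

```python
from typing import Callable


class BudgetExceeded(RuntimeError):
    """Raised when a request would push spend past the hard cap."""


class BudgetGuard:
    """Hypothetical spend tracker with a soft alert threshold and a hard cap (USD)."""

    def __init__(self, soft_limit: float, hard_limit: float,
                 alert: Callable[[str], None] = print):
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.alert = alert  # e.g. send an email or page on-call; print for the demo
        self.spent = 0.0
        self._alerted = False

    def charge(self, tokens: int, price_per_token: float) -> None:
        """Record a request's cost, alerting at the soft limit and refusing past the hard cap."""
        cost = tokens * price_per_token
        if self.spent + cost > self.hard_limit:
            raise BudgetExceeded(
                f"Refusing request: ${self.spent + cost:.2f} would exceed ${self.hard_limit:.2f}")
        self.spent += cost
        if self.spent >= self.soft_limit and not self._alerted:
            self._alerted = True
            self.alert(f"Budget alert: ${self.spent:.2f} spent (soft limit ${self.soft_limit:.2f})")


guard = BudgetGuard(soft_limit=50.0, hard_limit=100.0)
guard.charge(1_000_000, 0.00003)      # about $30: under both limits
guard.charge(1_000_000, 0.00003)      # about $60 total: triggers the one-time alert
try:
    guard.charge(2_000_000, 0.00003)  # would reach about $120: refused
except BudgetExceeded as err:
    print(err)
```

The check runs before the cost is recorded, so a refused request never counts toward spend.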

Enforce Token Length Limits: Impose strict limits on the number of tokens allowed in requests and responses. This helps prevent attackers from flooding your system with overly long inputs or causing the model to generate excessively large outputs that could exhaust resources.
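A minimal sketch of the input-side check follows. It assumes the common rule of thumb that English text averages about four characters per token; a tokenizer library such as OpenAI’s `tiktoken` gives exact counts for OpenAI models. `MAX_INPUT_TOKENS` and the helper names are hypothetical placeholders. For the output side, pass a cap to the model itself: OpenAI’s Chat Completions API, for example, accepts `max_tokens` (or `max_completion_tokens` in newer versions of the API).

```python
import math

MAX_INPUT_TOKENS = 500  # placeholder limit for a single support-chat message
CHARS_PER_TOKEN = 4     # rough English-text approximation, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate a token count from character length (rule of thumb only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def check_input(text: str, limit: int = MAX_INPUT_TOKENS) -> str:
    """Return the text unchanged if it fits the limit; otherwise raise ValueError."""
    estimated = estimate_tokens(text)
    if estimated > limit:
        raise ValueError(f"Message too long: ~{estimated} tokens (limit {limit})")
    return text


check_input("Where is my order #1234?")  # accepted
try:
    check_input("spam " * 1000)          # 5,000 characters, ~1,250 tokens: rejected
except ValueError as err:
    print(err)  # Message too long: ~1250 tokens (limit 500)
```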

User Authentication and Tiered Access: Implement strong user authentication and create different access levels. This lets you apply stricter controls and rate limits for new or unverified users, while offering more flexibility to trusted, long-term users.
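Tiered access can be as simple as a lookup table that maps each authenticated user’s tier to its own limits, so the other safeguards read their thresholds from one place. The tier names and numbers below are invented for illustration. One design choice is deliberate: unauthenticated or unrecognized users fall back to the most restrictive tier rather than the most generous one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TierLimits:
    requests_per_minute: int
    max_input_tokens: int
    daily_token_budget: int


# Hypothetical tiers; tune the numbers to your own usage patterns.
TIERS = {
    "anonymous": TierLimits(requests_per_minute=2, max_input_tokens=200, daily_token_budget=5_000),
    "verified": TierLimits(requests_per_minute=10, max_input_tokens=1_000, daily_token_budget=50_000),
    "trusted": TierLimits(requests_per_minute=30, max_input_tokens=4_000, daily_token_budget=500_000),
}


def limits_for(tier: Optional[str]) -> TierLimits:
    """Return a tier's limits, defaulting to the strictest tier when unknown or missing."""
    return TIERS.get(tier or "anonymous", TIERS["anonymous"])


print(limits_for("trusted").requests_per_minute)     # 30
print(limits_for(None).requests_per_minute)          # 2
print(limits_for("unknown-tier").max_input_tokens)   # 200
```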

Flag API-like behaviour: Quickly identify and restrict accounts whose usage looks automated, such as sustained high-volume or machine-regular requests, to prevent infrastructure overload and avoid SLA (Service Level Agreement) issues.
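What counts as "API-like" depends on your product, but two simple signals are request volume and unnaturally regular timing. People pause to read and type, while scripts often fire at near-constant intervals. The heuristic below is a hypothetical sketch, not an established detection method. It flags an account that has sent many requests whose gaps barely vary, and the `min_requests` and `max_gap_stdev` thresholds are assumptions to calibrate against real traffic before moving flagged accounts to stricter limits.

```python
import statistics
from itertools import accumulate, cycle, islice
from typing import Sequence


def looks_automated(
    timestamps: Sequence[float],
    min_requests: int = 20,
    max_gap_stdev: float = 0.5,
) -> bool:
    """Heuristic: many requests with near-constant spacing suggest a script.

    `timestamps` are one account's request times in seconds, oldest first.
    `min_requests` and `max_gap_stdev` are placeholder thresholds.
    """
    if len(timestamps) < min_requests:
        return False
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    # A low standard deviation of the gaps means a metronome-like request pace.
    return statistics.pstdev(gaps) <= max_gap_stdev


scripted = [i * 2.0 for i in range(30)]  # one request exactly every 2 seconds
human = list(accumulate(islice(cycle([5.0, 30.0, 12.0, 45.0, 8.0, 60.0]), 30)))
print(looks_automated(scripted))  # True
print(looks_automated(human))     # False
```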

Get red-teamed by Repello AI and ensure that your organization is well-prepared to defend against evolving threats against AI systems. Our team of experts will meticulously assess your AI applications, identifying vulnerabilities and implementing robust security measures to fortify your defenses.

Contact us now to schedule your red-teaming assessment and embark on the journey to building safer and more resilient AI systems.

8 The Green, Ste A

Dover, DE 19901, United States of America
